Google held its annual I/O developer conference today, and during the two-hour event Google made one thing clear: it is fully embracing AI. Google revealed updates and new features for its existing AI tech while also unveiling some brand-new capabilities. There was an all-out blitzkrieg of AI announcements today, but we’ll break down a few of our favorites.

Gemini Will Be Everywhere

If you walk away with one thing from this article, know this: Google Gemini is here to stay. In fact, Google intends to integrate Gemini into nearly every product and service you know and love. Gmail, Google Maps, Google Chrome, Google Photos, and Android OS will all get significantly deeper Gemini integration.

For starters, Google announced that Gemini 1.5 Pro is coming to Gemini Advanced, and with it comes a whole host of new features. Gemini Advanced will be able to summarize documents faster and more accurately, and you’ll soon be able to pull very specific details out of long documents. For example, you could quickly find the pet policy in a rental agreement or compare the key arguments in a research paper.

On mobile, and soon in Google Chrome, Gemini Nano will make generative search and AI features easier to access. Android users will see Gemini become more deeply integrated into the core functionality of their devices, with Google Photos and Maps as the primary beneficiaries of this increased capability.

Photos will gain a better understanding of context to improve overall search accuracy. One example Google gave was searching for birthday party inspiration. You’ll soon be able to ask Photos, “What themes have we used for (insert child’s name here)’s birthday parties?” Photos will understand the context of your question, comb through your library for pictures of birthday cakes and decorations in the background, and give you an answer.

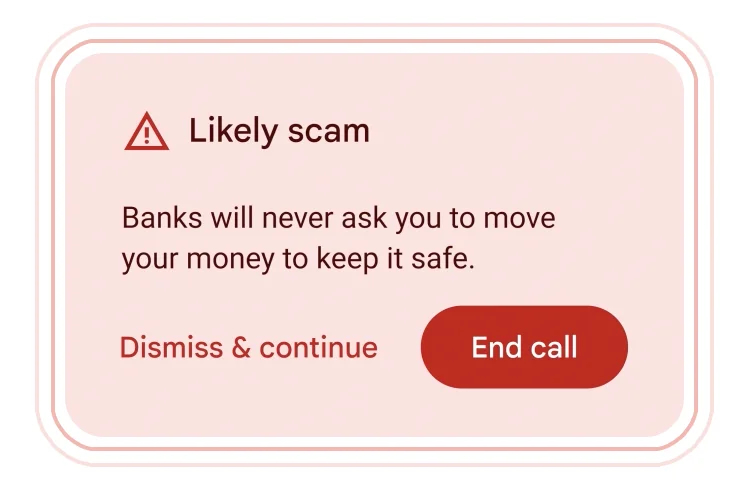

We’ll also see enhanced spam filtering and robocall protections in Android. Future versions of Gemini will be able to listen to your calls for context clues and conversation patterns commonly used in phishing scams. When Gemini detects unusual requests, you’ll get a pop-up notification warning you of the potential danger of continuing the call.

Google also announced Gemini Live, a new conversational experience that uses Google’s state-of-the-art speech recognition tech to make speaking with Gemini more intuitive. You’ll be able to choose from a variety of “natural-sounding” voices, and the exchange is designed to flow as close to a real conversation as possible. You’ll even be able to interrupt Gemini mid-response to ask clarifying questions, just as you would in an actual discussion. Users will see this feature first within the Google Messages app, and then eventually as a standalone feature in Gemini Advanced.

Finally, Gemini will allow users to create “Gems,” customized versions of Gemini that can act as almost anything you can think of (within reason, of course). Your Gem can be a sous chef, a creative writing coach, a coding partner, or a gym buddy. You simply describe what your Gem should do and how you want it to respond. Think of a basic version of Samantha from ‘Her,’ minus the whole sentience thing (for now, at least).

While it’s clear that Google is fully committed to the AI experience across its ecosystem, it still lags behind ChatGPT in terms of accuracy and capability. What’s interesting is that many of these features will be built directly into Google services and Android devices, which will make them far more accessible.

There were tons of other announcements during I/O; for the full list, check out Google’s I/O blog wrap-up.